Generative AI is no longer a sci-fi talking point. It is on every student’s phone, in many tutors’ workflows, and increasingly in the tools centres use to design and mark assessments. That creates enormous opportunity (time saved, personalised help, new ways to check understanding) and equally large responsibilities for providers, assessors and learners.

Key Takeaways:

- Generative AI brings useful tools, but legal obligations remain: UK GDPR principles apply and ICO guidance expects transparency, fairness and risk assessment.

- Centres and awarding organisations remain accountable for assessment validity: AI cannot replace human judgement. Regulators such as Ofqual stress fairness, validity and public confidence.

- Use a layered approach to integrity: Clear policies, honest declarations, assessment design changes, and human verification rather than over-reliance on detection tools.

- Teach learners to use AI responsibly: It is a workplace skill. Policies should protect assessment integrity while not excluding legitimate assistive use.

This blog explains the legal, ethical and practical lines you must watch, while keeping things readable and practical for people who assess and quality-assure vocational qualifications in the UK.

From Sci-Fi to Study Desk: Generative AI in Education

AI isn't just for futuristic labs. Chatbots, text generators, and automated marking tools are here now.

For learners, it's a 'study buddy'.

For assessors, it's a 'helpful intern'.

The key is to use it wisely, which means understanding how AI fits into education, what assessment integrity requires, and what responsible AI use looks like.

What Must Organisations Remember About Data Protection And AI?

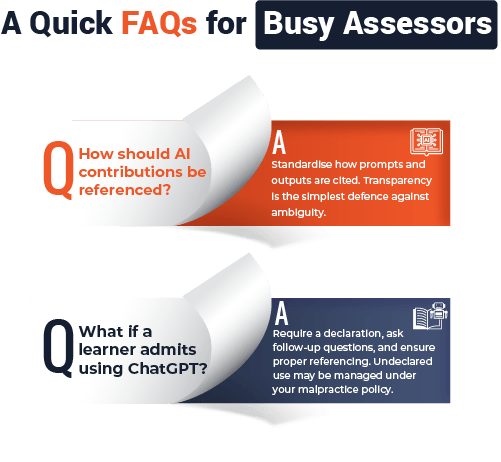

Any time an AI system uses personal data, whether to personalise feedback, store learner submissions for training, or suggest marks, the UK GDPR and the Information Commissioner's Office (ICO) rules apply. Organisations must think about lawful bases for processing, purpose limitation (do not use data for other reasons without clear justification), fairness, transparency, and security. The ICO expects organisations to be able to explain how an AI system affects people and to consider risks to vulnerable groups when designing or adopting AI.

Practically, this means carrying out proportionate checks, such as a data protection impact assessment (DPIA) when an AI will process learners' personal data, ensuring contractual clarity with third-party AI vendors about how training data is handled, and keeping records that justify the choices you make about using AI. The ICO has also run consultations and published practical positions on generative AI, which help shape what is expected from organisations.

Who is Responsible? Humans or Robots?

Responsibility is shared but not optional. If a centre uses AI to help set or mark assessments, the centre and awarding organisation remain accountable for the validity, fairness and security of those assessments. Human judgement cannot be outsourced entirely: professional assessors must design, sample and verify assessment decisions, and must be able to justify them.

Regulators such as Ofqual have set out their approach to regulating AI in the qualifications sector, emphasising fairness, validity, security and public confidence. That means assessment bodies should embed AI governance into existing quality assurance and malpractice processes, not bolt it on as an afterthought.

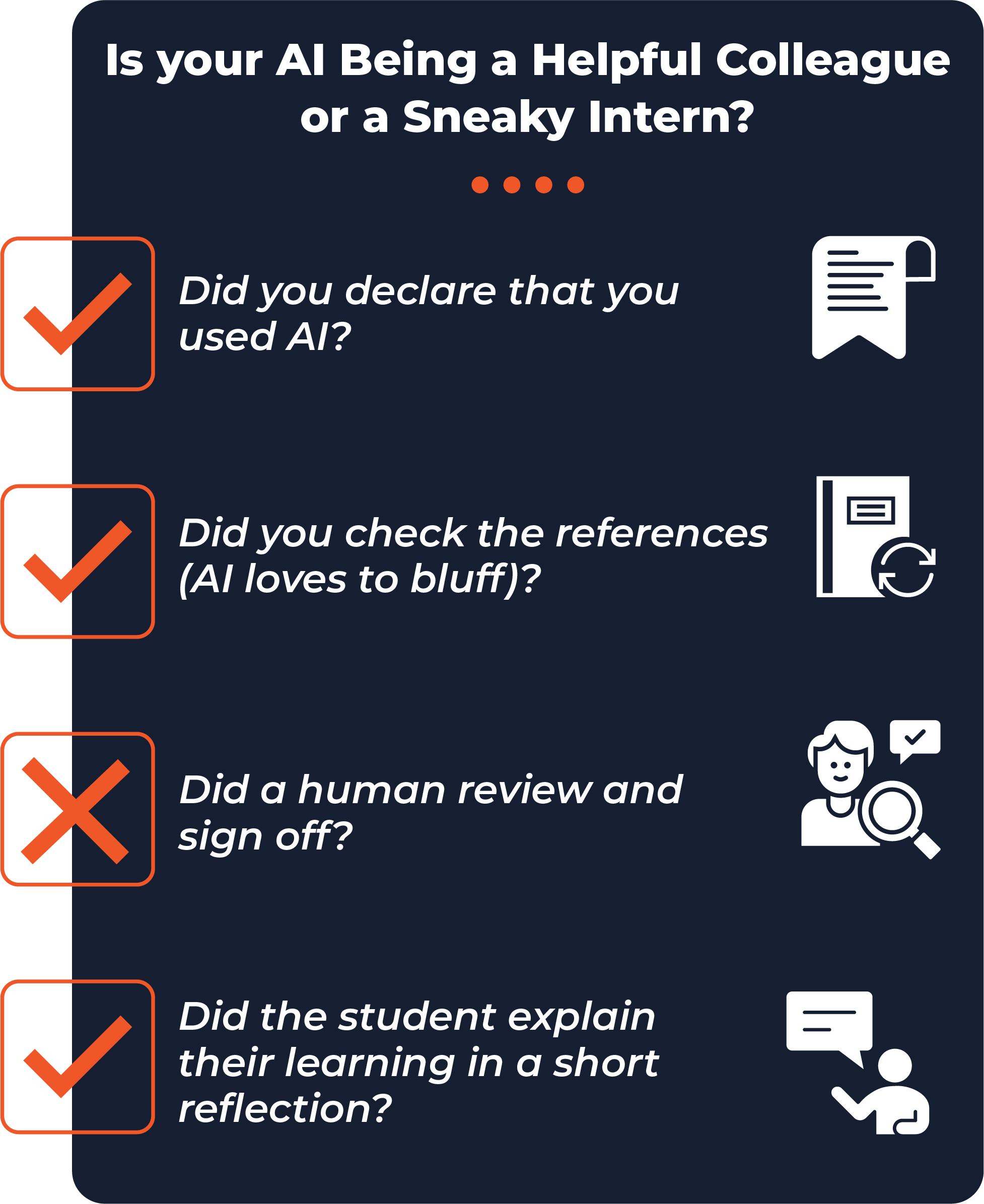

For Assessors

If you use AI to draft feedback or suggest marks, treat its output as a draft that requires your expert review.

Think of AI as a caffeinated intern: helpful, but you still need to sign off on their work.

For Learners

Tell learners explicitly what level of AI assistance is allowed, how to reference it, and what counts as malpractice.

Academic Integrity: How To Remain Fair Without Being The 'AI Police'

AI challenges traditional ways of detecting misconduct. Detection tools are imperfect and can miss or misclassify AI-generated text. That is why a holistic approach is better. Design assessments that ask learners to demonstrate applied knowledge in ways AI finds harder to mimic (for example, workplace-based observation, professional discussion, viva voce or timed supervised tasks). Embed reflective practice that asks learners to explain decisions in context. Teach learners about responsible AI use as a professional skill. Sector bodies such as Jisc and QAA provide resources on maintaining academic integrity and using AI constructively rather than purely punitively.

Also, remember equality and access. Some learners rely on AI for accessibility reasons; blanket bans can disadvantage those learners. Instead, adopt nuanced policies that allow legitimate, declared assistive uses while still protecting assessment validity.

AI Can Lie: Bias, Hallucinations, and What to Watch

Generative models can reproduce bias present in their training data and 'hallucinate' invented facts, references or citations that look plausible but are false. For assessors, this matters because marking or feedback based solely on AI output may propagate errors and unfairness. Likewise, using AI systems that ingest learner work as training data could expose learners' personal details if the vendor's usage terms permit model training. Always check vendor terms, data retention policies and whether the supplier uses learner data to improve models.

What Should a Centre Policy Cover? Your AI Playbook

A robust AI policy for a training centre or awarding organisation should, at minimum, set out:

- permitted and prohibited AI uses for learners and staff

- requirements for declaring AI assistance in submissions

- how AI will be used in assessment design and marking

- procedures for sampling and authentication

- responsibilities for data protection and vendor management

- proportionate sanctions in line with existing malpractice rules

The policy should be communicated clearly to learners and staff, and reviewed regularly as guidance from regulators evolves. Many awarding organisations and regulators have published model guidance and templates which centres can adapt.

How Do You Keep Marking Quality High When AI Is in the Loop?

Treat AI as an assistant, not the examiner. Keep human assessors in the loop for sampling, moderation and final decisions. Use professional discussion or short, targeted follow-up tasks to confirm learners' understanding. Make sure internal quality assurance (IQA) processes check that AI outputs are appropriately edited, evidenced and referenced, and that any calculations, tables or citations generated by AI tools are accurate. If your awarding body requires it, ensure external quality assurance (EQA) samples show how authenticity was assured.

Navigating the AI Era with Confidence

Generative AI is no longer a futuristic idea: it is here, reshaping the way learners study, tutors teach, and assessors mark. While the opportunities are exciting, they come with clear responsibilities: protecting learner data, maintaining academic integrity, and ensuring fair, valid assessments. By combining human oversight with responsible AI use, embedding clear policies, and providing learners with guidance, education professionals can harness AI safely and effectively.